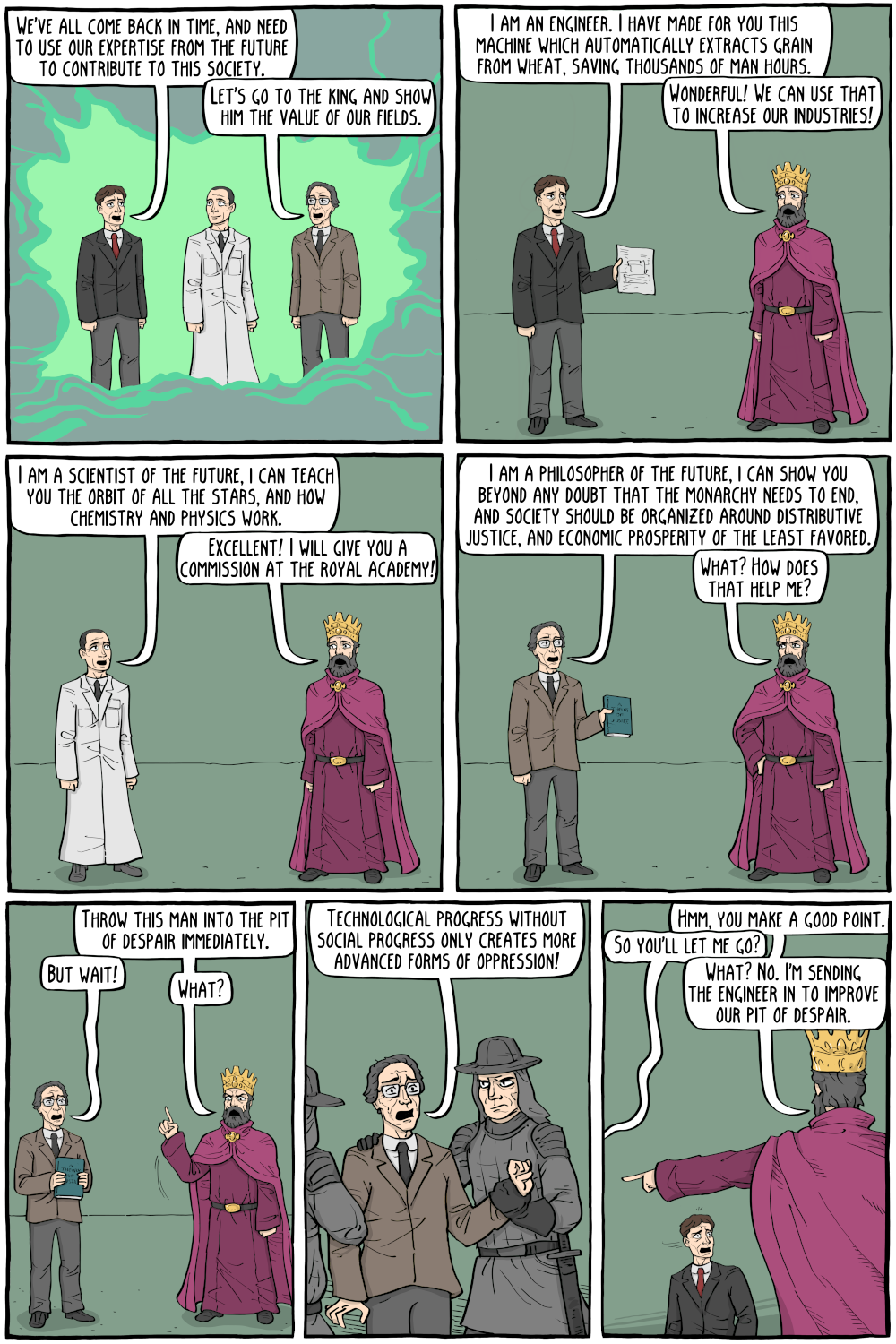

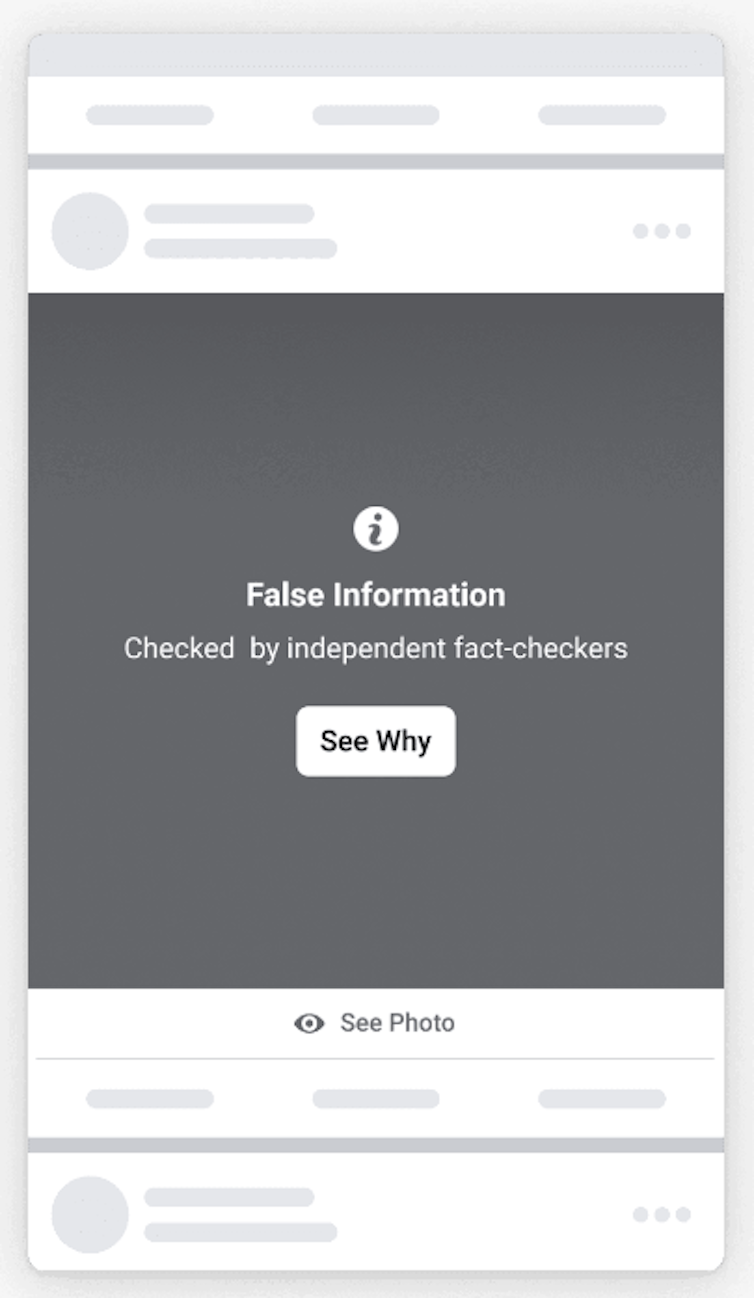

Meta founder and CEO Mark Zuckerberg has announced big changes in how the company addresses misinformation across Facebook, Instagram and Threads. Instead of relying on independent third-party fact-checkers, Meta will now emulate Elon Musk’s X (formerly Twitter) in using “community notes”. These crowdsourced contributions allow users to flag content they believe is questionable.

Zuckerberg claimed these changes promote “free expression”. But some experts worry he’s bowing to right-wing political pressure, and will effectively allow a deluge of hate speech and lies to spread on Meta platforms.

Research on the group dynamics of social media suggests those experts have a point.

At first glance, community notes might seem democratic, reflecting values of free speech and collective decisions. Crowdsourced systems such as Wikipedia, Metaculus and PredictIt, though imperfect, often succeed at harnessing the wisdom of crowds — where the collective judgement of many can sometimes outperform even experts.

Research shows that diverse groups that pool independent judgements and estimates can be surprisingly effective at discerning the truth. However, wise crowds seldom have to contend with social media algorithms.

Many people rely on platforms such as Facebook for their news, risking exposure to misinformation and biased sources. Relying on social media users to police information accuracy could further polarise platforms and amplify extreme voices.

Two tendencies rooted in our psychological need to sort ourselves and others into groups are of particular concern: in-group/out-group bias and acrophily (love of extremes).

In-group/out-group bias

Humans are biased in how they evaluate information. People are more likely to trust and remember information from their in-group — those who share their identities — while distrusting information from perceived out-groups. This bias leads to echo chambers, where like-minded people reinforce shared beliefs, regardless of accuracy.

It may feel rational to trust family, friends or colleagues over strangers. But in-group sources often hold similar perspectives and experiences, offering little new information. Out-group members, on the other hand, are more likely to provide diverse viewpoints. This diversity is critical to the wisdom of crowds.

But too much disagreement between groups can prevent community fact-checking from occurring at all. Many community notes on X, such as those related to COVID vaccines, were likely never shown publicly because users disagreed with one another. The benefit of third-party fact-checking was that it provided an objective outside source, rather than requiring widespread agreement among users across a network.

Worse, such systems are vulnerable to manipulation by well-organised groups with political agendas. For instance, Chinese nationalists reportedly mounted a campaign to edit Wikipedia entries related to China-Taiwan relations to be more favourable to China.

Political polarisation and acrophily

Indeed, politics intensifies these dynamics. In the US, political identity increasingly dominates how people define their social groups.

Political groups are motivated to define “the truth” in ways that advantage them and disadvantage their political opponents. It’s easy to see how organised efforts to spread politically motivated lies and discredit inconvenient truths could corrupt the wisdom of crowds in Meta’s community notes.

Social media accelerates this problem through a phenomenon called acrophily, or a preference for the extreme. Research shows that people tend to engage with posts slightly more extreme than their own views.

These increasingly extreme posts are more likely to be negative than positive. Psychologists have known for decades that bad is more engaging than good. We are hardwired to pay more attention to negative experiences and information than positive ones.

On social media, this means negative posts – about violence, disasters and crises – get more attention, often at the expense of more neutral or positive content.

Those who express these extreme, negative views gain status within their groups, attracting more followers and amplifying their influence. Over time, people come to think of these slightly more extreme negative views as normal, slowly moving their own views toward the poles.

A recent study of 2.7 million posts on Facebook and Twitter found that messages containing words such as “hate”, “attack” and “destroy” were shared and liked at higher rates than almost any other content. This suggests that social media isn’t just amplifying extreme views — it’s fostering a culture of out-group hate that undermines the collaboration and trust needed for a system like community notes to work.

The path forward

The combination of negativity bias, in-group/out-group bias and acrophily supercharges one of the greatest challenges of our time: polarisation. Through polarisation, extreme views become normalised, eroding the potential for shared understanding across group divides.

The best solutions, which I examine in my forthcoming book, The Collective Edge, start with diversifying our information sources. First, people need to engage with — and collaborate across — different groups to break down barriers of mistrust. Second, they must seek information from multiple, reliable news and information outlets, not just social media.

However, social media algorithms often work against these solutions, creating echo chambers and trapping people’s attention. For community notes to work, these algorithms would need to prioritise diverse, reliable sources of information.

While community notes could theoretically harness the wisdom of crowds, their success depends on overcoming these psychological vulnerabilities. Perhaps increased awareness of these biases can help us design better systems — or empower users to use community notes to promote dialogue across divides. Only then can platforms move closer to solving the misinformation problem.

Colin M. Fisher does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

Oh fuck off. This is like blaming individuals for the obesity crisis instead of addressing the root causes behind systemic problems like food deserts and the sedentary lifestyles of modern office jobs.

Expecting *everyone* to know and act on individual fixes is not going to work. The problem is the platforms being owned by billionaires.

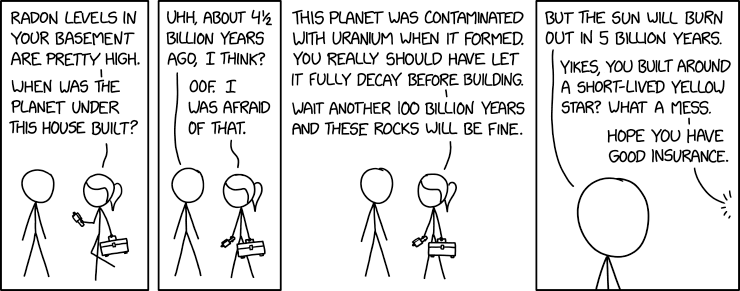

https://en.wikipedia.org/wiki/Earth%27s_internal_heat_budget#Heat_and_early_estimate_of_Earth's_age

The part where it took 800 million years before life evolved something to do with all the toxic oxygen those damn cyanobacteria were producing was a particularly difficult hurdle.

https://web.archive.org/web/20130124200735/https://www.patheos.com/blogs/daylightatheism/2009/02/bands-of-iron/

Funny how that one never pops up in discussions of "great filters": the Sun is 4.6 billion years old and will burn out in another 5 billion. Life spent almost 10% of that time repeatedly almost killing itself before some life form figured out how to use oxygen. What if that's the part where we got lucky, and most habitable planets get stuck in this self-destructive oxygen cycle?

https://en.wikipedia.org/wiki/Great_Filter

Meta has announced it will abandon its fact-checking program, starting in the United States. It was aimed at preventing the spread of online lies among more than 3 billion people who use Meta’s social media platforms, including Facebook, Instagram and Threads.

In a video, the company’s chief, Mark Zuckerberg, said fact checking had led to “too much censorship”.

He added it was time for Meta “to get back to our roots around free expression”, especially following the recent presidential election in the US. Zuckerberg characterised it as a “cultural tipping point, towards once again prioritising speech”.

Instead of relying on professional fact checkers to moderate content, the tech giant will now adopt a “community notes” model, similar to the one used by X.

This model relies on other social media users to add context or caveats to a post. It is currently under investigation by the European Union for its effectiveness.

This dramatic shift by Meta does not bode well for the fight against the spread of misinformation and disinformation online.

Independent assessment

Meta launched its independent, third-party, fact-checking program in 2016.

It did so during a period of heightened concern about information integrity, which coincided with the election of Donald Trump as US president and a furore about the role of social media platforms in spreading misinformation and disinformation.

As part of the program, Meta funded fact-checking partners – such as Reuters Fact Check, Australian Associated Press, Agence France-Presse and PolitiFact – to independently assess the validity of problematic content posted on its platforms.

Warning labels were then attached to any content deemed to be inaccurate or misleading. This helped users to be better informed about the content they were seeing online.

A backbone to global efforts to fight misinformation

Zuckerberg claimed Meta’s fact-checking program did not successfully address misinformation on the company’s platforms, stifled free speech and led to widespread censorship.

But the head of the International Fact-Checking Network, Angie Drobnic Holan, disputes this. In a statement reacting to Meta’s decision, she said:

Fact-checking journalism has never censored or removed posts; it’s added information and context to controversial claims, and it’s debunked hoax content and conspiracy theories. The fact-checkers used by Meta follow a Code of Principles requiring nonpartisanship and transparency.

A large body of evidence supports Holan’s position.

In 2023 in Australia alone, Meta displayed warnings on over 9.2 million distinct pieces of content on Facebook (posts, images and videos), and over 510,000 posts on Instagram, including reshares. These warnings were based on articles written by Meta’s third-party, fact-checking partners.

Numerous studies have demonstrated that these kinds of warnings effectively slow the spread of misinformation.

Meta’s fact-checking policies also required the partner fact-checking organisations to avoid debunking content and opinions from political actors and celebrities, and to avoid debunking political advertising.

Fact checkers can verify claims from political actors and publish their findings on their own websites and social media accounts. However, this fact-checked content was still not subject to reduced circulation or censorship on Meta platforms.

The COVID pandemic demonstrated the usefulness of independent fact checking on Facebook. Fact checkers helped curb much harmful misinformation and disinformation about the virus and the effectiveness of vaccines.

Importantly, Meta’s fact-checking program also served as a backbone to global efforts to fight misinformation on other social media platforms. It provided financial support to up to 90 accredited fact-checking organisations around the world.

What impact will Meta’s changes have on misinformation online?

Replacing independent, third-party fact checking with a “community notes” model of content moderation is likely to hamper the fight against misinformation and disinformation online.

Last year, for example, reports from The Washington Post and the Center for Countering Digital Hate in the US found that X’s community notes feature was failing to stem the flow of lies on the platform.

Meta’s turn away from fact checking will also create major financial problems for third-party, independent fact checkers.

The tech giant has long been a dominant source of funding for many fact checkers. And it has often incentivised fact checkers to verify certain kinds of claims.

Meta’s announcement will now likely force these independent fact checkers to turn away from strings-attached arrangements with private companies in their mission to improve public discourse by addressing online claims.

Yet, without Meta’s funding, they will likely be hampered in their efforts to counter attempts by other actors to weaponise fact checking. For example, Russian President Vladimir Putin recently announced the establishment of a state fact-checking network following “Russian values”, in stark contrast to the International Fact-Checking Network’s Code of Principles.

This makes independent, third-party fact checking even more necessary. But clearly, Meta doesn’t agree.

Michelle Riedlinger has received funding from Meta’s 2022 Foundational Integrity Research granting scheme.

Silvia Montaña-Niño has received funding from Meta’s 2022 Foundational Integrity Research granting scheme.

Ned Watt does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.